Code

from pyspark.conf import SparkConf

from pyspark.context import SparkContext

conf = SparkConf().setAll([('spark.app.name', '2_test_sparkconf'),

('spark.master', 'spark://spark-master:17077'),

('spark.driver.cores', '1'),

('spark.driver.memory','1g'),

('spark.executor.memory', '1g'),

('spark.executor.cores', '2'),

('spark.cores.max', '2')])

sc = SparkContext(conf = conf)

# Print every effective setting as a (key, value) tuple.
for setting in sc.getConf().getAll():
    print(setting)

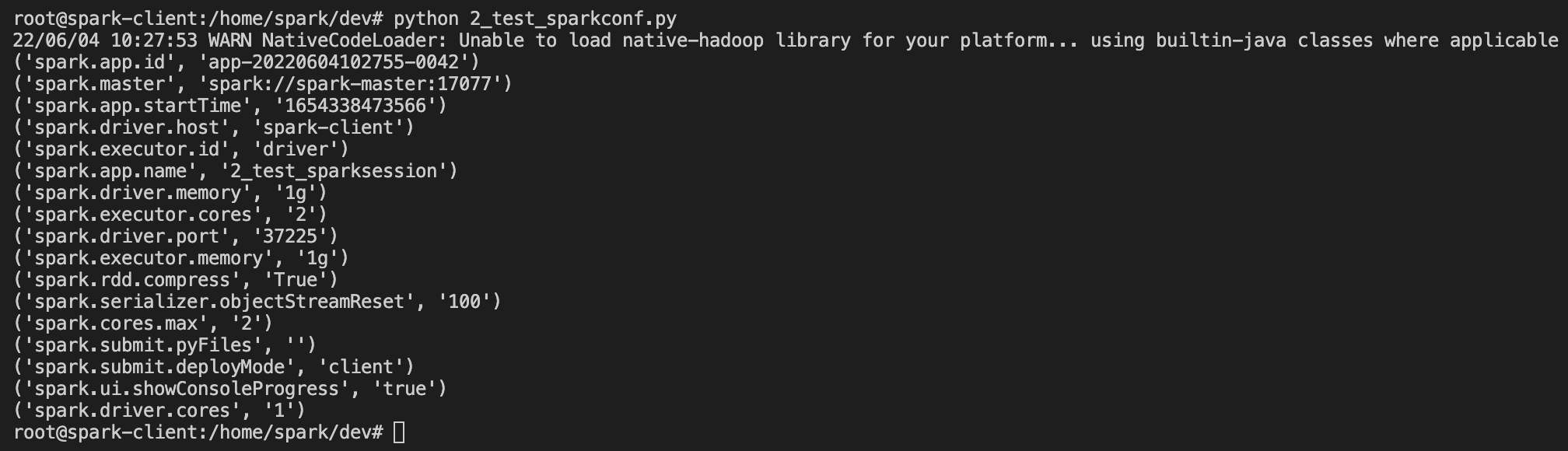

sc.stop()

Result

('spark.master', 'spark://spark-master:17077')

('spark.driver.host', 'spark-client')

('spark.executor.id', 'driver')

('spark.driver.memory', '1g')

('spark.executor.cores', '2')

('spark.driver.port', '36905')

('spark.app.id', 'app-20220604103240-0045')

('spark.executor.memory', '1g')

('spark.rdd.compress', 'True')

('spark.app.name', '2_test_sparkconf')

('spark.cores.max', '2')

('spark.app.startTime', '1654338757957')

('spark.serializer.objectStreamReset', '100')

('spark.submit.pyFiles', '')

('spark.submit.deployMode', 'client')

('spark.ui.showConsoleProgress', 'true')

('spark.driver.cores', '1')
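The result mixes the settings passed to `setAll` with values Spark fills in on its own, such as `spark.driver.host` and `spark.submit.deployMode`. The sketch below is one way to separate the two groups. It assumes the configuration is available as a list of `(key, value)` tuples, as in the listing above. The helper name `split_settings` is hypothetical, and the sample data is a small subset copied from the result so the snippet runs without a cluster.

```python
# Hypothetical helper (not part of PySpark): split the effective configuration
# into the settings the user requested and the ones Spark added itself.
def split_settings(requested, effective):
    requested_keys = {key for key, _ in requested}
    user_set = [(k, v) for k, v in effective if k in requested_keys]
    spark_added = [(k, v) for k, v in effective if k not in requested_keys]
    return user_set, spark_added


# Subset of the values shown in the result above. With a live SparkContext,
# `effective` would instead come from sc.getConf().getAll().
requested = [('spark.master', 'spark://spark-master:17077'),
             ('spark.driver.memory', '1g'),
             ('spark.executor.cores', '2')]

effective = [('spark.master', 'spark://spark-master:17077'),
             ('spark.driver.host', 'spark-client'),
             ('spark.driver.memory', '1g'),
             ('spark.executor.cores', '2'),
             ('spark.submit.deployMode', 'client')]

user_set, spark_added = split_settings(requested, effective)

print('user-set:')
for setting in user_set:
    print(setting)

print('added by Spark:')
for setting in spark_added:
    print(setting)
```

Comparing the values in `user_set` against the requested list is a quick check that each setting was actually applied to the running context.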