data = {

'parent': [{

'id': 'id_1',

'category': 'category_1',

}, {

'id': 'id_2',

'category': 'category_2',

}]

}

df = spark.createDataFrame([data])

df.printSchema()

df.show(truncate=False)

df = df.select(explode(df.parent))

df.printSchema()

df.show(truncate=False)

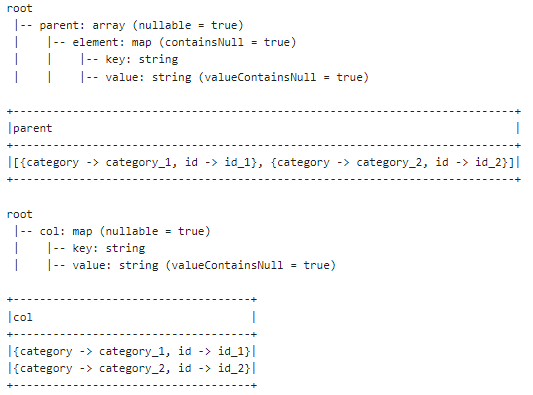

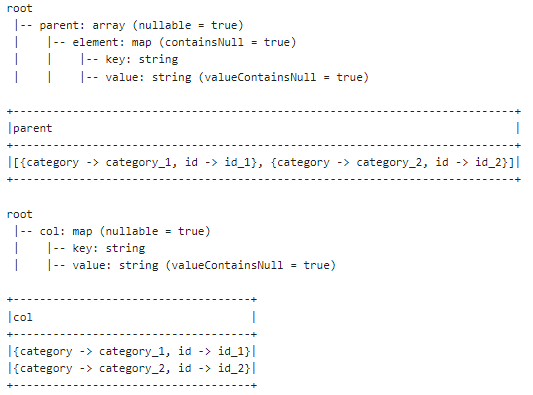

root

|-- parent: array (nullable = true)

| |-- element: map (containsNull = true)

| | |-- key: string

| | |-- value: string (valueContainsNull = true)

+----------------------------------------------------------------------------+

|parent |

+----------------------------------------------------------------------------+

|[{category -> category_1, id -> id_1}, {category -> category_2, id -> id_2}]|

+----------------------------------------------------------------------------+

root

|-- col: map (nullable = true)

| |-- key: string

| |-- value: string (valueContainsNull = true)

+------------------------------------+

|col |

+------------------------------------+

|{category -> category_1, id -> id_1}|

|{category -> category_2, id -> id_2}|

+------------------------------------+